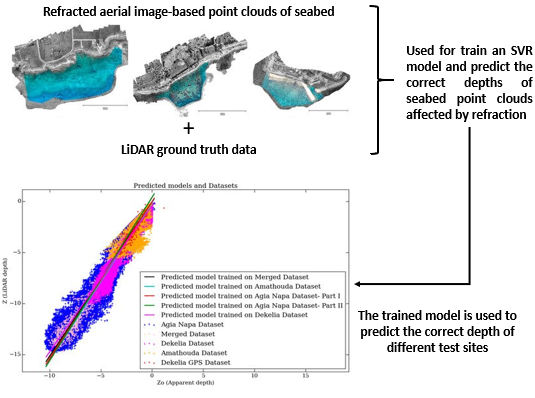

Figure 1. DepthLearn's concept. We employ machine learning tools that learn the systematic underestimation of the estimated depths in order to deliver accurate bathymetric information.

The determination of accurate bathymetric information is a key element for near-offshore activities and hydrological studies, such as coastal engineering applications, sedimentary processes, hydrographic surveying, archaeological mapping and biological research. Through structure from motion (SfM) and multi-view stereo (MVS) techniques, aerial imagery can provide a low-cost alternative to bathymetric LiDAR (Light Detection and Ranging) surveys, as it offers additional important visual information and higher spatial resolution. Nevertheless, water refraction poses significant challenges for depth determination. Until now, this problem has been addressed through customized image-based refraction correction algorithms or by modifying the collinearity equation. In this article, in order to overcome the water refraction errors accurately and at scale, we employ machine learning tools that are able to learn the systematic underestimation of the estimated depths. In particular, an SVR (support vector regression) model was developed, based on known depth observations from bathymetric LiDAR surveys, which is able to accurately recover bathymetry from point clouds derived from SfM-MVS procedures. Experimental results and validation were based on datasets derived from different test sites and demonstrated the high potential of our approach. Moreover, we exploited the fusion of LiDAR and image-based point clouds to address the challenges of both modalities in problematic areas.
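The idea of learning the systematic depth underestimation from reference depths can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the data are synthetic, the apparent depth is modelled with the simplified near-nadir relation (real depth divided by a refractive index of about 1.34), and an ordinary least-squares line is used as a dependency-free stand-in for the SVR model described above. In practice, a support vector regressor such as scikit-learn's `sklearn.svm.SVR` could plausibly take the place of `fit_linear`.

```python
import math
import random


def fit_linear(x, y):
    """Ordinary least-squares fit of y ~ slope * x + intercept (stand-in for SVR)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def rmse(pred, ref):
    """Root-mean-square error between predicted and reference depths."""
    return math.sqrt(sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(ref))


# Synthetic stand-ins (assumptions, not real survey data):
# "lidar_depth" plays the role of reference depths from a bathymetric LiDAR survey,
# "apparent_depth" plays the role of refraction-affected SfM-MVS depths.
rng = random.Random(0)
lidar_depth = [rng.uniform(0.5, 15.0) for _ in range(200)]
apparent_depth = [d / 1.34 + rng.gauss(0.0, 0.05) for d in lidar_depth]

# Train on the first 150 points, evaluate on the remaining 50.
slope, intercept = fit_linear(apparent_depth[:150], lidar_depth[:150])
corrected = [slope * z + intercept for z in apparent_depth[150:]]

rmse_before = rmse(apparent_depth[150:], lidar_depth[150:])
rmse_after = rmse(corrected, lidar_depth[150:])
print(f"slope={slope:.3f}, RMSE before={rmse_before:.2f} m, after={rmse_after:.2f} m")
```

Under these assumptions the fitted slope should land close to the refractive index used to generate the data, and the error on the held-out points should drop sharply after correction. Real imagery is not strictly nadir, so the true relation between apparent and real depth is less clean than in this toy setup.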

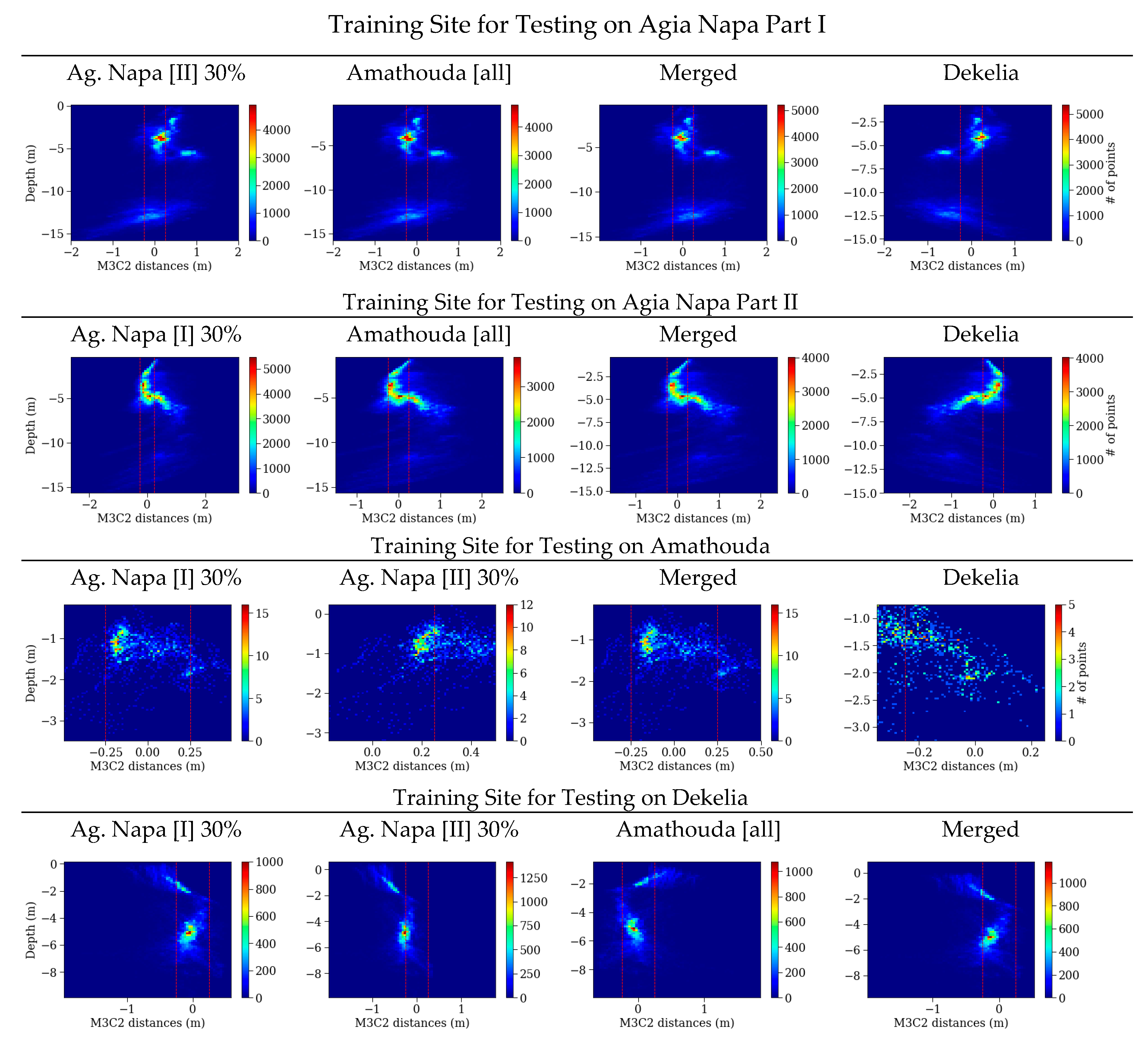

Figure 2. Mean distances. Histograms of the M3C2 distances between the LiDAR point cloud and the point clouds corrected with the proposed approach, in relation to the real depth. The red dashed lines represent the accuracy limits generally accepted for hydrography, as introduced by the International Hydrographic Organization (IHO).

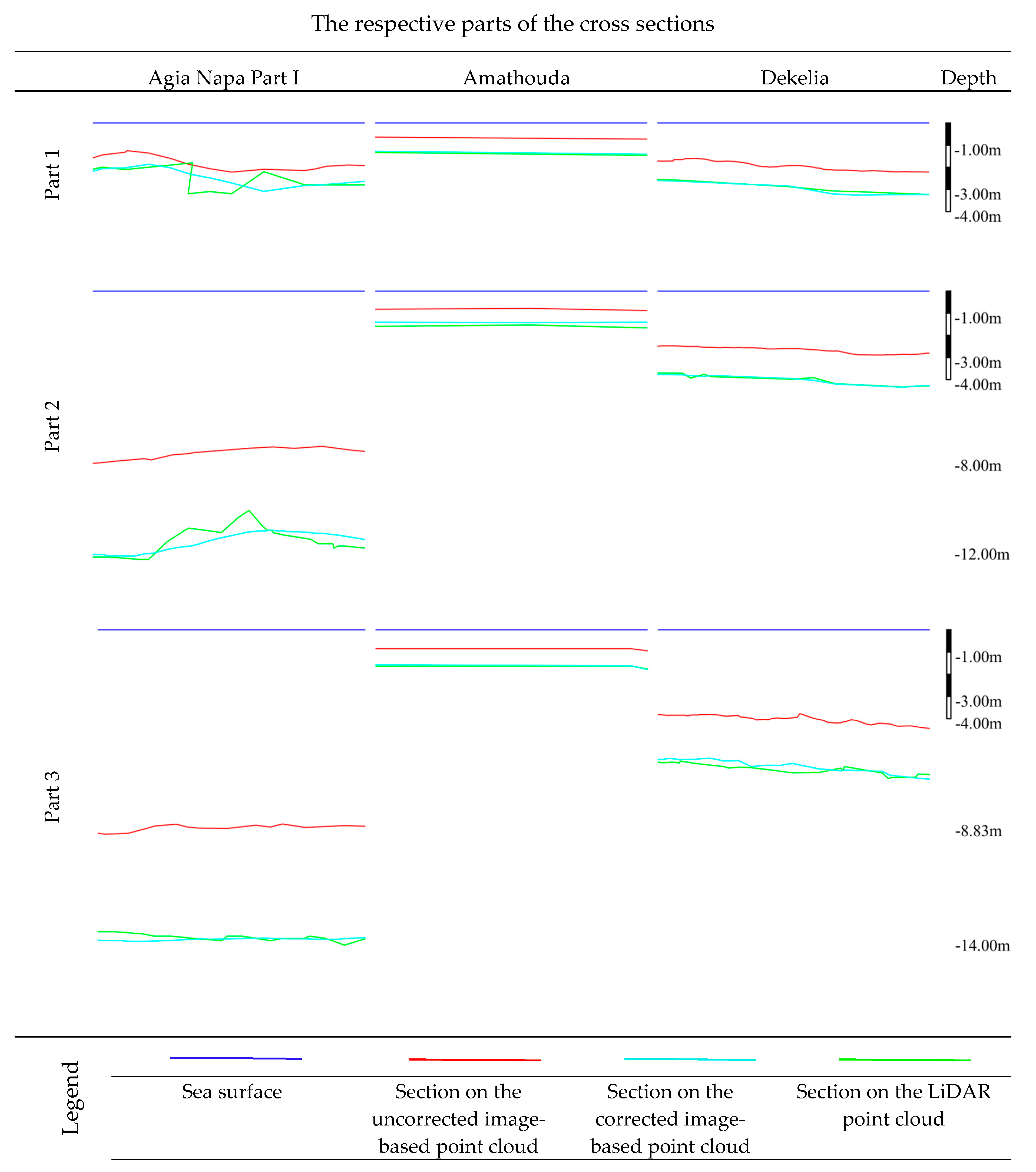

Figure 3. Cross-sections. Indicative parts of the cross-sections (X and Y axes have the same scale) after the application of the proposed approach: the Agia Napa (Part I) region, predicted with the model trained on 30% of the Part II region (first column); the Amathouda test site, predicted with the model trained on Dekelia (middle column); and the Dekelia test site, predicted with the model trained on the Merged dataset (right column).

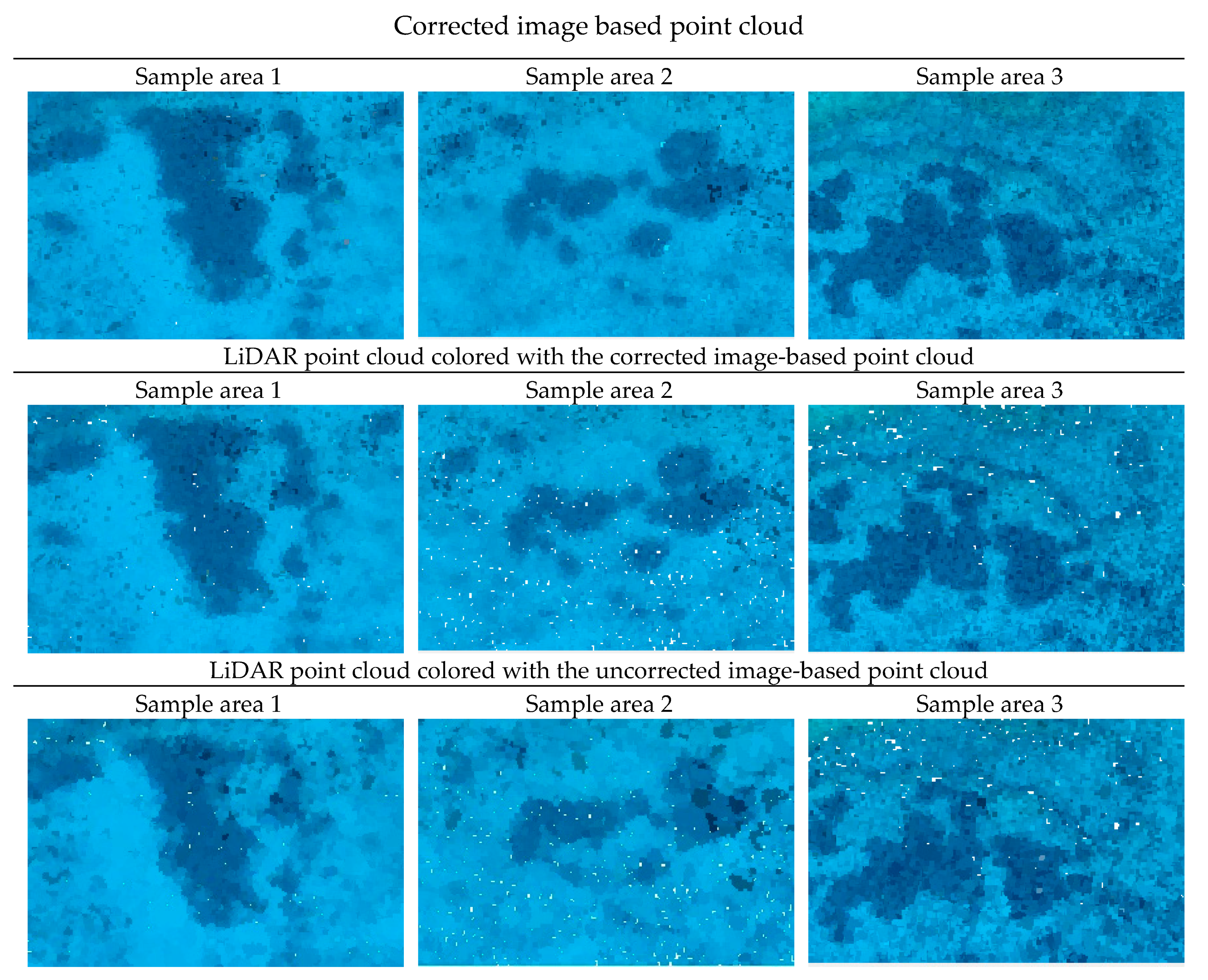

Figure 4. Fusion results. Three different sample areas of the Agia Napa seabed with depths between 9.95 m and 11.75 m. The first two columns depict two sample areas of the Agia Napa (I) seabed, while the third depicts a sample area of the Agia Napa (II) seabed. The first row depicts the three areas on the image-based point cloud; the second row depicts them on the LiDAR point cloud, colored using the corrected image-based point cloud; and the third row depicts them on the LiDAR point cloud, colored using the uncorrected image-based one. Point size is multiplied by a factor of four to facilitate visual comparison.